
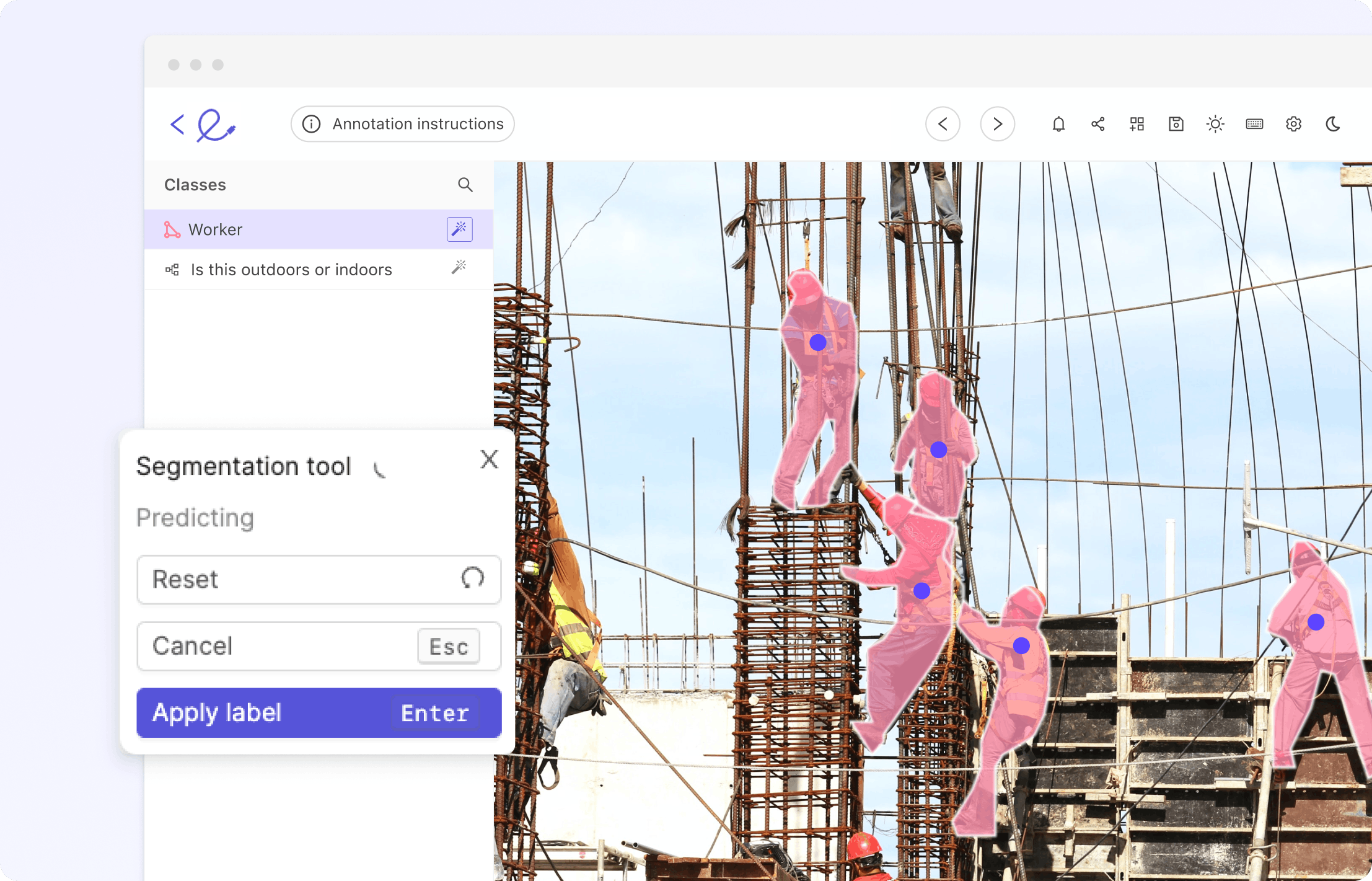

Computer vision is having its ChatGPT moment with the release of the Segment Anything Model (SAM) by Meta last week. Trained on over 1.1 billion segmentation masks, SAM is a foundation model for predictive AI use cases rather than generative AI. While it has shown remarkable flexibility in segmenting a wide range of image modalities and problem spaces, it was released without “fine-tuning” functionality.

This tutorial outlines the key steps to fine-tune SAM's mask decoder. In particular, it describes which SAM functions to use to pre- and post-process the data so that it is in good shape for fine-tuning.

What is the Segment Anything Model (SAM)?

The Segment Anything Model (SAM) is a segmentation model developed by Meta AI. It is considered the first foundation model for computer vision. SAM was trained on a huge corpus of data containing millions of images and over a billion masks, making it extremely powerful. As its name suggests, SAM is able to produce accurate segmentation masks for a wide variety of images. SAM’s design allows it to take human prompts into account, making it particularly powerful for human-in-the-loop annotation. These prompts can be multi-modal: they can be points on the area to be segmented, a bounding box around the object to be segmented, or a text prompt about what should be segmented.

The model is structured into 3 components: an image encoder, a prompt encoder, and a mask decoder.

The image encoder generates an embedding for the image being segmented, whilst the prompt encoder generates an embedding for the prompts. The image encoder is a particularly large component of the model. This is in contrast to the lightweight mask decoder, which predicts segmentation masks based on the embeddings.
Meta AI has made the weights and biases of the model trained on the Segment Anything 1 Billion Mask (SA-1B) dataset available as a model checkpoint.

Learn more about how Segment Anything works in our explainer blog post Segment Anything Model (SAM) Explained.

What is Model Fine-Tuning?

Publicly available state-of-the-art models have a custom architecture and are typically supplied with pre-trained model weights. If these architectures were supplied without weights, the models would need to be trained from scratch by the users, who would need to use massive datasets to obtain state-of-the-art performance.

Model fine-tuning is the process of taking a pre-trained model (architecture + weights) and showing it data for a particular use case. This will typically be data that the model hasn’t seen before, or that is underrepresented in its original training dataset.

The difference between fine-tuning the model and starting from scratch is the starting value of the weights and biases. If we were training from scratch, these would be randomly initialized according to some strategy. In such a starting configuration, the model would ‘know nothing’ of the task at hand and perform poorly. By using pre-existing weights and biases as a starting point, we can ‘fine-tune’ the weights and biases so that our model works better on our custom dataset. For example, the information learned to recognize cats (edge detection, counting paws) will be useful for recognizing dogs.

Why Would I Fine-Tune a Model?

The purpose of fine-tuning a model is to obtain higher performance on data that the pre-trained model has not seen before. For example, an image segmentation model trained on a broad corpus of data gathered from phone cameras will have mostly seen images from a horizontal perspective. If we tried to use this model for satellite imagery taken from a vertical perspective, it may not perform as well.
If we were trying to segment rooftops, the model may not yield the best results. The pre-training is useful because the model will have learned how to segment objects in general, so we want to take advantage of this starting point to build a model that can accurately segment rooftops. Furthermore, it is likely that our custom dataset would not have millions of examples, so we want to fine-tune instead of training the model from scratch.

Fine-tuning is desirable so that we can obtain better performance on our specific use case, without having to incur the computational cost of training a model from scratch.

How to Fine-Tune Segment Anything Model [With Code]

Background & Architecture

We gave an overview of the SAM architecture in the introduction section. The image encoder has a complex architecture with many parameters. In order to fine-tune the model, it makes sense for us to focus on the mask decoder, which is lightweight and therefore easier, faster, and more memory-efficient to fine-tune.

In order to fine-tune SAM, we need to extract the underlying pieces of its architecture (image and prompt encoders, mask decoder). We cannot use SamPredictor.predict for two reasons:

- We want to fine-tune only the mask decoder.
- This function calls SamPredictor.predict_torch, which has the @torch.no_grad() decorator, which prevents us from computing gradients.

Thus, we need to examine the SamPredictor.predict function and call the appropriate functions with gradient calculation enabled on the part we want to fine-tune (the mask decoder). Doing this is also a good way to learn more about how SAM works.

Creating a Custom Dataset

We need three things to fine-tune our model:

- Images on which to draw segmentations
- Segmentation ground truth masks
- Prompts to feed into the model

We chose the stamp verification dataset since it has data that SAM may not have seen in its training (i.e., stamps on documents).
We can verify that it performs well, but not perfectly, on this dataset by running inference with the pre-trained weights. The ground truth masks are also extremely precise, which will allow us to calculate accurate losses. Finally, this dataset contains bounding boxes around the segmentation masks, which we can use as prompts to SAM. An example image is shown below. These bounding boxes align well with the workflow that a human annotator would go through when looking to generate segmentations.

Input Data Preprocessing

We need to preprocess the scans from numpy arrays to pytorch tensors. To do this, we can follow what happens inside SamPredictor.set_image and SamPredictor.set_torch_image, which preprocess the image. First, we can use utils.transforms.ResizeLongestSide to resize the image, as this is the transform used inside the predictor. We can then convert the image to a pytorch tensor and use the SAM preprocess method to finish preprocessing.

Training Setup

We download the model checkpoint for the vit_b model and load it in:

    from segment_anything import sam_model_registry

    sam_model = sam_model_registry['vit_b'](checkpoint='sam_vit_b_01ec64.pth')

We can set up an Adam optimizer with defaults and specify that the parameters to tune are those of the mask decoder:

    import torch

    optimizer = torch.optim.Adam(sam_model.mask_decoder.parameters())

At the same time, we can set up our loss function, for example Mean Squared Error:

    loss_fn = torch.nn.MSELoss()

Training Loop

In the main training loop, we will be iterating through our data items, generating masks, and comparing them to our ground truth masks so that we can optimize the model parameters based on the loss function.

In this example, we used a GPU for training since it is much faster than using a CPU. It is important to use .to(device) on the appropriate tensors to make sure that we don’t have certain tensors on the CPU and others on the GPU.
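To make the resizing step of the preprocessing described above more concrete, here is a minimal sketch of the geometry involved, written in plain Python. It assumes a target side length of 1024 pixels and padding on the bottom and right to reach a square input. The helper names (`longest_side_shape`, `scale_box`, `padding_to_square`) are hypothetical and written for this illustration; they are not functions from the SAM codebase, which you should call directly in practice.

```python
def longest_side_shape(old_h: int, old_w: int, target: int = 1024) -> tuple[int, int]:
    """Return (new_h, new_w) so that the longest side equals `target` (assumed 1024)."""
    scale = target / max(old_h, old_w)
    # Round to the nearest integer pixel count.
    return int(old_h * scale + 0.5), int(old_w * scale + 0.5)


def scale_box(box, old_hw, new_hw):
    """Rescale an (x0, y0, x1, y1) box prompt from the original to the resized frame."""
    (old_h, old_w), (new_h, new_w) = old_hw, new_hw
    x0, y0, x1, y1 = box
    return (x0 * new_w / old_w, y0 * new_h / old_h,
            x1 * new_w / old_w, y1 * new_h / old_h)


def padding_to_square(new_hw, target: int = 1024):
    """Return (pad_bottom, pad_right) needed to reach a target x target input."""
    new_h, new_w = new_hw
    return target - new_h, target - new_w


# Illustrative values only: a 600 x 800 (height x width) scan with one box prompt.
original_hw = (600, 800)
resized_hw = longest_side_shape(*original_hw)
box = scale_box((100, 50, 400, 300), original_hw, resized_hw)
pad = padding_to_square(resized_hw)

print(resized_hw, box, pad)
```

The point of the sketch is that the bounding box prompt has to be rescaled with the same factor as the image; otherwise the prompt and the image embedding refer to different coordinate frames.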
We want to embed images by wrapping the encoder in the torch.no_grad() context manager, since otherwise we will have memory issues, along with the fact that we are not looking to fine-tune the image encoder.

    with torch.no_grad():
        image_embedding = sam_model.image_encoder(input_image)

We can also generate the prompt embeddings within the no_grad context manager. We use our bounding box coordinates, converted to pytorch tensors.

    with torch.no_grad():
        sparse_embeddings, dense_embeddings = sam_model.prompt_encoder(
            points=None,
            boxes=box_torch,
            masks=None,
        )

Finally, we can generate the masks. Note that here we are in single mask generation mode (in contrast to the 3 masks that are normally output).

    low_res_masks, iou_predictions = sam_model.mask_decoder(
        image_embeddings=image_embedding,
        image_pe=sam_model.prompt_encoder.get_dense_pe(),
        sparse_prompt_embeddings=sparse_embeddings,
        dense_prompt_embeddings=dense_embeddings,
        multimask_output=False,
    )

The final step here is to upscale the masks back to the original image size since they are low resolution. We can use Sam.postprocess_masks to achieve this. We will also want to generate binary masks from the predicted masks so that we can compare these to our ground truths. It is important to use torch functionals in order to not break backpropagation. Strictly speaking, the result is a thresholded and normalized mask rather than hard 0/1 values, which is what keeps the operation differentiable.

    from torch.nn.functional import threshold, normalize

    upscaled_masks = sam_model.postprocess_masks(low_res_masks, input_size, original_image_size).to(device)
    binary_mask = normalize(threshold(upscaled_masks, 0.0, 0)).to(device)

Finally, we can calculate the loss and run an optimization step:

    loss = loss_fn(binary_mask, gt_binary_mask)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

By repeating this over a number of epochs and batches, we can fine-tune the SAM decoder.
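The pattern used in the loop above (a frozen encoder evaluated under `torch.no_grad()`, with only the decoder's parameters handed to the optimizer) can be checked on a toy model. The sketch below is an illustration under stated assumptions: `encoder` and `decoder` are small hypothetical `nn.Linear` stand-ins, not the SAM modules, and the data is random. It only demonstrates that the encoder weights stay untouched while the decoder weights are updated.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical stand-ins for a large frozen encoder and a lightweight decoder.
encoder = nn.Linear(8, 4)
decoder = nn.Linear(4, 1)

# Only the decoder's parameters are given to the optimizer.
optimizer = torch.optim.Adam(decoder.parameters())
loss_fn = nn.MSELoss()

# Random toy data standing in for images and ground-truth masks.
inputs = torch.randn(16, 8)
targets = torch.randn(16, 1)

encoder_before = encoder.weight.detach().clone()
decoder_before = decoder.weight.detach().clone()

for _ in range(5):
    # No graph is built for the encoder, which saves memory and blocks its gradients.
    with torch.no_grad():
        embedding = encoder(inputs)
    prediction = decoder(embedding)  # gradients are tracked from here on
    loss = loss_fn(prediction, targets)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

encoder_unchanged = torch.equal(encoder.weight, encoder_before)
decoder_changed = not torch.equal(decoder.weight, decoder_before)
print(encoder_unchanged, decoder_changed)
```

Running the same kind of before/after comparison on the real model is a cheap sanity check that only the mask decoder is being tuned.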
Saving Checkpoints and Starting a Model from it

Once we are done with training and satisfied with the performance uplift, we can save the state dict of the tuned model using:

    torch.save(sam_model.state_dict(), PATH)

We can then load this state dict when we want to perform inference on data that is similar to the data we used to fine-tune the model.

You can find the Colab Notebook with all the code you need to fine-tune SAM here. Keep reading if you want a fully working solution out of the box!

Fine-Tuning for Downstream Applications

While SAM does not currently offer fine-tuning out of the box, we are building a custom fine-tuner integrated with the Encord platform. As shown in this post, we fine-tune the decoder in order to achieve this. This is available as an out-of-the-box one-click procedure in the web app, where the hyperparameters are automatically set.

Original vanilla SAM mask:

Mask generated by fine-tuned version of the model:

We can see that this mask is tighter than the original mask. This was the result of fine-tuning on a small subset of images from the stamp verification dataset, and then running the tuned model on a previously unseen example. With further training and more examples, we could obtain even better results.

Conclusion

That's all, folks! You have now learned how to fine-tune the Segment Anything Model (SAM). If you're looking to fine-tune SAM out of the box, you might also be interested to learn that we have recently released the Segment Anything Model in Encord, allowing you to fine-tune the model without writing any code.

Phi-3 is a family of open artificial intelligence models developed by Microsoft. These models have quickly gained popularity for being the most capable and cost-effective small language models (SLMs) available. The Phi-3 models, including Phi-3-mini, are cost-effective and outperform models of the same size and even the next size up across various benchmarks of language, reasoning, coding, and math. Let’s discuss these models in detail.

What are Small Language Models (SLM)?

Small Language Models (SLMs) are scaled-down versions of large language models (LLMs) like OpenAI’s GPT, Meta’s Llama 3, Mistral 7B, etc. These models are designed to be more lightweight and efficient, both in the computational resources needed for training and inference on simpler tasks and in their memory footprint.

The “small” in SLMs refers to the number of parameters that the model has. These models are typically trained on a large corpus of high-quality data and learn to predict the next word in a sentence, which allows them to generate coherent and contextually relevant sentences.

These lightweight AI models are typically used in scenarios where computational resources are limited or where real-time inference is necessary. They sacrifice some performance and capabilities compared to their larger counterparts but still provide valuable language understanding and generation capabilities.

SLMs find applications in various fields such as mobile devices, IoT devices, edge computing, and scenarios that require low-latency interactions. They allow for more widespread deployment of natural language processing capabilities in resource-constrained environments.

Microsoft's Phi-3 is a prime example of an SLM that pushes the boundaries of what's possible with these models, offering superior performance across various benchmarks while being cost-effective.
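To make the link between parameter count and memory footprint concrete, here is a back-of-the-envelope sketch. It assumes a model with 3.8 billion parameters (the size of Phi-3-mini) and counts only the memory needed to hold the weights at different numeric precisions; activations, the KV cache, and runtime overhead are ignored, so real usage will be higher. The helper `weight_memory_gb` is a hypothetical name introduced for this illustration.

```python
def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Assumption: 3.8 billion parameters, as for Phi-3-mini.
PHI3_MINI_PARAMS = 3_800_000_000

footprints = {
    "fp32": weight_memory_gb(PHI3_MINI_PARAMS, 32),
    "fp16": weight_memory_gb(PHI3_MINI_PARAMS, 16),
    "int4": weight_memory_gb(PHI3_MINI_PARAMS, 4),
}

for precision, gigabytes in footprints.items():
    print(f"{precision}: ~{gigabytes:.1f} GB")
```

Under these assumptions, the weights shrink from roughly 15 GB at 32-bit precision to under 2 GB at 4-bit precision, which is the kind of reduction that makes on-device deployment plausible.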
Phi-3: Introducing Microsoft’s SLM

Microsoft has launched Phi-3, a Small Language Model (SLM) designed to deliver strong performance while remaining lightweight enough to run on resource-constrained devices like smartphones. With 3.8 billion parameters, Phi-3 represents a significant milestone in compact language modeling technology. By prioritizing dataset curation and model architecture, Phi-3 achieves performance comparable to much larger models like Mixtral 8x7B and GPT-3.5.

Performance Evaluation

Phi-3's performance is assessed through rigorous evaluation against academic benchmarks and internal testing. Despite its smaller size, Phi-3 demonstrates impressive results, achieving 69% on the MMLU benchmark and 8.38 on MT-bench.

Source: Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone

When comparing the performance of Phi-3 with GPT-3.5, a Large Language Model (LLM), it's important to consider the tasks at hand. For many language, reasoning, coding, and math benchmarks, Phi-3 models have been shown to outperform models of the same size and those of the next size up, including GPT-3.5.

Phi-3 Architecture

Phi-3 is a transformer decoder architecture with a default context length of 4K, ensuring efficient processing of input data while maintaining context awareness. Phi-3 also offers a long context version, Phi-3-mini-128K, extending context length to 128K for handling tasks requiring broader context comprehension. With 32 heads and 32 layers, Phi-3 balances model complexity with computational efficiency, making it suitable for deployment on mobile devices.

Microsoft Phi-3: Model Training Process

The model training process for Microsoft's Phi-3 takes a comprehensive approach:

High-Quality Data Training

Phi-3 is trained using high-quality data curated from various sources, including heavily filtered web data and synthetic data.
This meticulous data selection process ensures that the model receives diverse and informative input to enhance its language understanding and reasoning capabilities.

Extensive Post-training

Post-training procedures play a crucial role in refining Phi-3's performance and ensuring its adaptability to diverse tasks and scenarios. Through extensive post-training techniques, including supervised fine-tuning and direct preference optimization, Phi-3 undergoes iterative improvement to enhance its proficiency in tasks such as math, coding, reasoning, and conversation.

Reinforcement Learning from Human Feedback (RLHF)

Microsoft incorporates reinforcement learning from human feedback (RLHF) into Phi-3's training regime. This mechanism allows the model to learn from human interactions, adapting its responses based on real-world feedback. RLHF enables Phi-3 to continuously refine its language generation capabilities, ensuring more contextually appropriate and accurate responses over time.

If you are looking to integrate RLHF into your ML pipeline, read the blog Top Tools for RLHF to find the right tools for your project.

Automated Testing

Phi-3's training process includes rigorous automated testing procedures to assess model performance and identify potential areas for improvement. Automated testing frameworks enable efficient evaluation of Phi-3's functionality across various linguistic tasks and domains, facilitating ongoing refinement and optimization.

Manual Red-teaming

In addition to automated testing, Phi-3 undergoes manual red-teaming, wherein human evaluators systematically analyze model behavior and performance. This manual assessment process provides valuable insights into Phi-3's strengths and weaknesses, guiding further training iterations and post-training adjustments to enhance overall model quality and reliability.

Advantages of Phi-3: SLM Vs. LLM

As a Small Language Model (SLM), Phi-3 offers several distinct advantages over traditional Large Language Models (LLMs), highlighting its suitability for a variety of applications and deployment scenarios.

- Resource Efficiency: SLMs like Phi-3 are designed to be more resource-efficient than LLMs. With its compact size and optimized architecture, Phi-3 consumes fewer computational resources during both training and inference, making it ideal for deployment on resource-constrained devices such as smartphones and IoT devices.
- Size and Flexibility: Phi-3-mini, a 3.8B language model, is available in two context-length variants: 4K and 128K tokens. It is the first model in its class to support a context window of up to 128K tokens, with little impact on quality.
- Instruction-tuned: Phi-3 models are instruction-tuned, meaning that they’re trained to follow different types of instructions reflecting how people normally communicate.
- Scalability: SLMs like Phi-3 offer greater scalability than LLMs. Their reduced computational overhead allows for easier scaling across distributed systems and cloud environments, enabling seamless integration into large-scale applications with high throughput requirements.
- Optimized for Various Platforms: Phi-3 models have been optimized for ONNX Runtime with support for Windows DirectML along with cross-platform support across GPU, CPU, and even mobile hardware.

While LLMs will still be the gold standard for solving many types of complex tasks, SLMs like Phi-3 offer many of the same capabilities found in LLMs but are smaller in size and are trained on smaller amounts of data.

For more information about the Phi-3 models, read the technical report Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone.

Quality Vs. Model Size Comparison

In the trade-off between model size and performance quality, Phi-3 claims remarkable efficiency and effectiveness compared to larger models.
Performance Parity

Despite its smaller size, Phi-3 achieves performance parity with larger LLMs such as Mixtral 8x7B and GPT-3.5. Through innovative training methodologies and dataset curation, Phi-3 delivers competitive results on benchmark tests and internal evaluations, demonstrating its ability to rival larger models in terms of language understanding and generation capabilities.

Optimized Quality

Phi-3 prioritizes dataset quality optimization within its constrained parameter space, leveraging advanced training techniques and data selection strategies to maximize performance. By focusing on the quality of data and training processes, Phi-3 achieves impressive results that are comparable to, if not better than, those of larger LLMs.

Efficient Utilization

Phi-3 shows efficient utilization of model parameters, demonstrating that superior performance can be achieved without exponentially increasing model size. By striking a balance between model complexity and resource efficiency, Phi-3 sets a new standard for small-scale language modeling, offering a compelling alternative to larger, more computationally intensive models.

Quality of Phi-3 models' performance on the MMLU benchmark compared to other models of similar size

Client Success Case Study

Organizations like ITC, a leading business in India, are already using Phi-3 models to drive efficiency in their solutions. ITC's collaboration with Microsoft on the Krishi Mitra copilot, a farmer-facing app, showcases the practical impact of Phi-3 in agriculture. By integrating fine-tuned versions of Phi-3, ITC aims to improve efficiency while maintaining accuracy, ultimately enhancing the value proposition of its farmer-facing application.

For more information, read the blog Generative AI in Azure Data Manager for Agriculture.
Limitations of Phi-3

The limitations of Phi-3, despite its impressive capabilities, stem primarily from its smaller size compared to Large Language Models (LLMs):

Limited Factual Knowledge

Due to its limited parameter space, Phi-3-mini may struggle with tasks that require extensive factual knowledge, as evidenced by lower performance on benchmarks like TriviaQA. The model's inability to store vast amounts of factual information poses a challenge for tasks reliant on deep factual understanding.

Language Restriction

Phi-3-mini primarily operates within the English language domain, which restricts its applicability in multilingual contexts. While efforts are underway to explore multilingual capabilities, such as with Phi-3-small and the inclusion of more multilingual data, extending language support remains an ongoing challenge.

Dependency on External Resources

To compensate for its capacity limitations, Phi-3-mini may rely on external resources, such as search engines, to augment its knowledge base for certain tasks. While this approach can alleviate some constraints, it introduces dependencies and may not always guarantee optimal performance.

Challenges in Responsible AI (RAI)

Like many LLMs, Phi-3 faces challenges related to responsible AI practices, including factual inaccuracies, biases, inappropriate content generation, and safety concerns. Despite diligent efforts in data curation, post-training refinement, and red-teaming, these challenges persist and require ongoing attention and mitigation strategies.

For more information on Microsoft’s responsible AI practices, read Microsoft Responsible AI Standard, v2.

Phi-3 Availability

The first model in this family, Phi-3-mini, a 3.8B language model, is now available. It is available in two context-length variants: 4K and 128K tokens. Phi-3-mini is available on Microsoft Azure AI Model Catalog, Hugging Face, and Ollama.
It has been optimized for ONNX Runtime with support for Windows DirectML along with cross-platform support across graphics processing unit (GPU), CPU, and even mobile hardware.

In the coming weeks, additional models will be added to the Phi-3 family to offer customers even more flexibility across the quality-cost curve. Phi-3-small (7B) and Phi-3-medium (14B) will be available in the Azure AI model catalog, and other model families shortly.

It will also be available as an NVIDIA NIM microservice with a standard API interface that can be deployed anywhere.

Phi-3: Key Takeaways

- Microsoft's Phi-3 models are small language models (SLMs) designed for efficiency and performance, with Phi-3-mini offering 3.8 billion parameters and competitive results compared to larger models.
- Phi-3 utilizes high-quality curated data and advanced post-training techniques, including reinforcement learning from human feedback (RLHF), to refine its performance. Its transformer decoder architecture ensures efficiency and context awareness.
- Phi-3 offers resource efficiency, scalability, and flexibility, making it suitable for deployment on resource-constrained devices. Despite its smaller size, it achieves performance parity with larger models through dataset quality optimization and efficient parameter utilization.
- While Phi-3 demonstrates impressive capabilities, limitations include limited factual knowledge and language support. It is currently available in its first model, Phi-3-mini, with plans for additional models to be added, offering more options across the quality-cost curve.

April 25


Here’s a scenario you’ve likely encountered: you spent months building your model, increased your F1 score above 90%, convinced all stakeholders to launch it, and... poof! As soon as your model sees real-world data, its performance drops below what you expected.

This is a common production machine learning (ML) problem for many teams, not just yours. It can also be a very frustrating experience for computer vision (CV) engineers, ML teams, and data scientists.

There are many potential factors behind this. Problems could stem from the quality of the production data, the design of the production pipelines, the model itself, or operational hurdles the system faces in production.

In this article, you will learn the four reasons why computer vision models fail in production and examine the ML lifecycle stages where they occur. These reasons cover the most common production CV and data science problems. Knowing their causes may help you prevent, mitigate, or fix them. You’ll also see strategies for addressing these problems at each step.

Let’s jump right into it!

Why do Models Fail in Production?

The ML lifecycle governs how ML models are developed and shipped; it involves sourcing data, data exploration and preparation (data cleaning and EDA), model training, and model deployment, where users can consume the model predictions. These processes are interdependent, as an error in one stage could affect the subsequent stages, resulting in a model that doesn’t perform well, or completely fails, in production.

Organizations develop machine learning (ML) and artificial intelligence (AI) models to add value to their businesses. When errors occur at any ML development stage, they can lead to production models failing, costing businesses capital, human resources, and opportunities to satisfy customer expectations.

Consider the implications of poorly labeling data for a CV model after data collection.
Or consider a model with an inherent bias: it could invariably affect results in a production environment. Notably, the problem can start even earlier, when businesses do not have precise reasons or objectives for developing and deploying machine learning models, which can cripple the process before it begins.

Assuming the organization has passed all stages and deployed its model, the errors we often see that lead to models failing in production include:

- Mislabeling data, which can train models on incorrect information.
- Prioritizing data quality only at later stages rather than as a foundational practice for ML engineers and CV teams.
- Ignoring the drift in data distribution over time, which can make models outdated or irrelevant.
- Implementing minimal or no validation (quality assurance) steps, which risks unnoticed errors progressing to production.
- Viewing model deployment as the final goal and neglecting necessary ongoing monitoring and adjustments.

Let’s look deeper at these errors and why they are the top reasons we see production models fail.

Reason #1: Data Labeling Errors

Data labeling is the foundation for training machine learning models, particularly in supervised learning, where models learn patterns directly from labeled data. This involves humans or AI systems assigning informative labels to raw data (whether images, videos, or DICOM) to provide context that enables models to learn.

AI algorithms also synthesize labeled data. Check out our guide on synthetic data and why it is useful.

Despite its importance, data labeling is prone to errors, primarily because it often relies on human annotators. These errors can compromise a model's accuracy by teaching it incorrect patterns.

Consider a computer vision project that identifies objects in images. Even a small percentage of mislabeled images can lead the model to associate incorrect features with an object. This could mean the model makes wrong predictions in production.
Potential Solution: Automated Labeling Error Detection

A potential solution is adopting tools and frameworks that automatically detect labeling errors. These tools analyze labeling patterns to identify outliers or inconsistent labels, helping annotators revise and refine the data. An example is Encord Active.

Encord Active is one of three products in the Encord platform (the others are Annotate and Index) that includes features to find failure modes in your data, labels, and model predictions.

A common data labeling issue is the border closeness of the annotations. Training data with many border-proximate annotations can lead to poor model generalization. If a model is frequently exposed to partially visible objects during training, it might not perform well when presented with fully visible objects in a deployment scenario. This can affect the model's accuracy and reliability in production.

Let’s see how Encord Active (EA) can help you, for instance, identify border-proximate annotations.

Step 1: Select your Project.

Step 2: Under the “Explorer” dashboard, find the “Labels” tab. Encord Active automatically finds patterns in the data and labels to surface potential issues with the labels.

Step 3: On the right pane, click on one of the issues EA found to filter your data and labels by it. In this case, click on “Border Closeness”. This metric identifies annotations that are too close to image borders. Images with a Border Proximity score of 1 are flagged as too close to the border.

Step 4: Select one of the images to inspect and validate the issue.

Here’s a GIF with the steps:

You will notice that EA also shows you the model’s predictions alongside the annotations, so you can visually inspect the annotation issue and resulting prediction.

Step 5: Visually inspect the top images EA flags and use the Collections feature to curate them.
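To make the idea of a border-closeness score concrete, here is a minimal sketch in plain Python. It is not Encord Active's implementation: the scoring formula, the function name `border_closeness`, the 0.95 threshold, and the sample annotations are all assumptions made for illustration. The only property borrowed from the description above is that a score of 1 means the annotation touches the image border.

```python
def border_closeness(box, image_w, image_h):
    """Hypothetical score in [0, 1]; 1.0 means the box touches an image edge.

    `box` is (x0, y0, x1, y1) in pixels.
    """
    x0, y0, x1, y1 = box
    # Gap between each box side and the nearest image edge, as a fraction of image size.
    margins = (
        x0 / image_w,
        y0 / image_h,
        (image_w - x1) / image_w,
        (image_h - y1) / image_h,
    )
    smallest_margin = max(0.0, min(margins))
    return 1.0 - smallest_margin


# Illustrative annotations on 100 x 100 images (file names are made up).
annotations = {
    "a.png": (0, 10, 50, 60),   # touches the left edge
    "b.png": (25, 25, 75, 75),  # centered
    "c.png": (40, 2, 90, 50),   # two pixels from the top edge
}

THRESHOLD = 0.95  # assumed cut-off for review
flagged = [
    name for name, box in annotations.items()
    if border_closeness(box, 100, 100) >= THRESHOLD
]
print(flagged)
```

A list like `flagged` is the kind of subset you would then inspect visually before deciding whether to exclude or re-annotate the images.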
There are a few approaches you could take after creating the Collections:

- Exclude the images that are border-proximate from the training data if the complete structure of the object is crucial for your application. This prevents the model from learning from incomplete data, which could lead to inaccuracies in object detection.
- Send the Collection to annotators for review.

Recommended Read: 5 Ways to Improve the Quality of Labeled Data.

Reason #2: Poor Data Quality

The foundation of any ML model's success lies in the quality of the data it's trained on. High-quality data is characterized by its accuracy, completeness, timeliness, and relevance to the business problem ("fit for purpose").

Several common issues can compromise data quality:

- Duplicate Images: They can artificially increase the frequency of particular features or patterns in the training data. This gives the model a false impression of these features' importance, causing overfitting.
- Noise in Images: Blur, distortion, poor lighting, or irrelevant background objects can mask important image features, hindering the model's ability to learn and recognize relevant patterns.
- Unrepresentative Data: When the training dataset doesn't accurately reflect the diversity of real-world scenarios, the model can develop biases. For example, a facial recognition system trained mainly on images of people with lighter skin tones may perform poorly on individuals with darker skin tones.
- Limited Data Variation: A model trained on insufficiently diverse data (including duplicates and near-duplicates) will struggle to adapt to new or slightly different images in production. For example, if a self-driving car system is trained on images taken in sunny weather, it might fail in rainy or snowy conditions.

Potential Solution: Data Curation

One way to tackle poor data quality, especially after collection, is to curate good quality data. Here is how to use Encord Active to automatically detect and classify duplicates in your set.
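As a tool-agnostic complement to the walkthrough that follows, the core idea of checking for exact duplicates that leak from the training set into the validation set can be sketched with content hashing from the Python standard library. This is an illustration under stated assumptions: the function names and the in-memory byte strings standing in for image files are hypothetical, and the approach only catches byte-identical files. Near-duplicates (resized, re-encoded, or slightly cropped images) need perceptual hashing or embedding similarity instead.

```python
import hashlib


def content_hash(image_bytes: bytes) -> str:
    """Hash the raw file contents; identical bytes give identical digests."""
    return hashlib.sha256(image_bytes).hexdigest()


def find_leaked_duplicates(train: dict[str, bytes], val: dict[str, bytes]) -> list[tuple[str, str]]:
    """Return (val_name, train_name) pairs whose file contents are byte-identical."""
    seen: dict[str, str] = {}
    for name, data in train.items():
        # Keep the first training file seen for each digest.
        seen.setdefault(content_hash(data), name)

    leaked = []
    for name, data in val.items():
        digest = content_hash(data)
        if digest in seen:
            leaked.append((name, seen[digest]))
    return leaked


# Stand-in byte strings; in practice these would be read from image files.
train_files = {"t1.png": b"\x00\x01\x02", "t2.png": b"\x03\x04"}
val_files = {"v1.png": b"\x03\x04", "v2.png": b"\x09"}

leaked_pairs = find_leaked_duplicates(train_files, val_files)
print(leaked_pairs)
```

Any pair reported here is a validation image the model has effectively already seen during training, which is exactly the situation that inflates evaluation metrics.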
Curate Duplicate Images Your testing and validation sets might contain duplicate training images that inflate the performance metrics. This makes the model appear better than it is, which could lead to false confidence about its real-world capabilities. Step 1: Navigate to the Explorer dashboard → Data tab On the right-hand pane, you will notice Encord Active has automatically detected common data quality issues based on the metrics it computed from the data. See an overview of the issues EA can detect on this documentation page. Step 2: Under the issues found, click on Duplicates to see the images EA flags as duplicates and near-duplicates with uniqueness scores of 0.0 to 0.00001. There are two steps you could take to solve this issue: Carefully remove duplicates, especially when dealing with imbalanced datasets, to avoid skewing the class distribution further. If duplicates cannot be fully removed (e.g., to maintain the original distribution of rare cases), use data augmentation techniques to introduce variations within the set of duplicates themselves. This can help mitigate some of the overfitting effects. Step 3: Under the Data tab, curate duplicates you want to remove or use augmentation techniques to improve by selecting them. Click Add to a Collection → Name the collection ‘Duplicates’ and add a description. See the complete steps: Once the duplicates are in the Collection, you can use the tag to filter them out of your training or validation data. If relevant, you can also create a new dataset to apply the data augmentation techniques. Other solutions could include: Implement Robust Data Validation Checks: Use automated tools that continuously validate data accuracy, consistency, and completeness at the entry point (ingestion) and throughout the data pipeline. 
Adopt a Centralized Data Management Platform: A unified view of data across sources (e.g., data lakes) can help identify discrepancies early and simplify access for CV engineers (or DataOps teams) to maintain data integrity. See Also: Improving Data Quality Using End-to-End Data Pre-Processing Techniques in Encord Active. Reason #3: Data Drift Data drift occurs when the statistical properties of the real-world images a model encounters in production change over time, diverging from the samples it was trained on. Drift can happen due to various factors, including: Concept Drift: The underlying relationships between features and the target variable change. For example, imagine a model trained to detect spam emails. The features that characterize spam (certain keywords, sender domains) can evolve over time. Covariate Shift: The input feature distribution changes while the relationship to the target variable remains unchanged. For instance, a self-driving car vision system trained in summer might see a different distribution of images (snowy roads, different leaf colors) in winter. Prior Probability Shift: The overall frequency of different classes changes. For example, a medical image classification model trained for a certain rare disease may encounter it more frequently as its prevalence changes in the population. If you want to dig deeper into the causes of drifts, check out the “Data Distribution Shifts and Monitoring” article. Potential Solution: Monitoring Data Drift There are two steps you could take to address data drift: Use tools that monitor the model's performance and the input data distribution. Look for shifts in metrics and statistical properties over time. Collect new data representing current conditions and retrain the model at appropriate intervals. This can be done regularly or triggered by alerts when significant drift is detected. 
You can achieve both within Encord: Step 1: Create the Dataset on Annotate to log your input data for training or production. If your data is on a cloud platform, check out one of the data integrations to see if it works with your stack. Step 2: Create an Ontology to define the structure of the dataset. Step 3: Create an Annotate Project based on your dataset and the ontology. Ensure the project also includes Workflows because some features in Encord Active only support projects that include workflows. Step 4: Import your Annotate Project to Active. This will allow you to import the data, ground truth, and any custom metrics to evaluate your data quality. See how it’s done in the video tutorial on the documentation. Step 5: Select the Project → Import your Model Predictions. There are two ways to inspect issues with the input data: Use the analytics view to get a statistical summary of the data. Use the issues found by Encord Active to manually inspect where your model is struggling. Step 6: On the Explorer dashboard → Data tab → Analytics View. Step 7: Under the Metric Distribution chart, select a quality metric to assess the distribution of your input data on. In this example, the “Diversity” metric ranks images from easy to hard samples to annotate. Easy samples have lower scores, while hard samples have higher scores. Step 8: On the right-hand pane, click on Dark. Navigate back to Grid View → Click on one of the images to inspect the ground truth (if available) vs. model predictions. Observe that the poor lighting could have caused the model to misidentify the toy bear as a person. (Of course, other reasons, such as class imbalance, could cause the model to misclassify the object.) You can inspect the class balance on the Analytics View → Class Distribution chart. Recommended Read: How to Detect Data Drift on Datasets. 
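To make the idea of monitoring the input data distribution concrete, here is a minimal sketch that compares the brightness of training images with the brightness of production images using a two-sample Kolmogorov-Smirnov statistic. It is not how Encord Active computes its metrics; the function names, the choice of mean brightness as the monitored property, and the 0.25 threshold are all assumptions made for illustration.

```python
import numpy as np


def mean_brightness(images):
    """Per-image mean pixel intensity for a batch shaped (N, H, W) or (N, H, W, C)."""
    images = np.asarray(images, dtype=np.float64)
    return images.reshape(images.shape[0], -1).mean(axis=1)


def ks_statistic(reference, production):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between the two empirical CDFs."""
    reference = np.sort(np.asarray(reference, dtype=np.float64))
    production = np.sort(np.asarray(production, dtype=np.float64))
    grid = np.concatenate([reference, production])
    cdf_ref = np.searchsorted(reference, grid, side="right") / reference.size
    cdf_prod = np.searchsorted(production, grid, side="right") / production.size
    return float(np.abs(cdf_ref - cdf_prod).max())


def drift_detected(reference, production, threshold=0.25):
    """Flag drift when the KS statistic exceeds a hand-picked threshold (an assumption, tune per use case)."""
    return ks_statistic(reference, production) > threshold


# Synthetic stand-ins for real images: bright training data vs. dark production data.
rng = np.random.default_rng(0)
train_images = rng.uniform(100, 200, size=(200, 8, 8))
dark_images = rng.uniform(0, 80, size=(200, 8, 8))
similar_images = rng.uniform(100, 200, size=(200, 8, 8))

train_brightness = mean_brightness(train_images)
print(drift_detected(train_brightness, mean_brightness(dark_images)))
print(drift_detected(train_brightness, mean_brightness(similar_images)))
```

In practice you would run a check like this on a schedule and use the result to trigger an alert or a retraining job. A statistical test from a library such as SciPy would also give you a p-value instead of a hand-picked threshold.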
There are other ways to manage data drift, including the following approaches: Adaptive Learning: Consider online learning techniques where the model continuously updates itself based on new data without full retraining. Note that this is still an active area of research with challenges in computer vision. Domain Adaptation: If collecting substantial amounts of labeled data from the new environment is not feasible, use domain adaptation techniques to bridge the gap between the old and new domains. Recommended Read:A Practical Guide to Active Learning for Computer Vision. Reason #4: Thinking Deployment is the Final Step (No Observability) Many teams mistakenly treat deployment as the finish line, which is one reason machine learning projects fail in production. However, it's crucial to remember that this is simply one stage in a continuous cycle. Models in production often degrade over time due to factors such as data drift (changes in input data distribution) or model drift (changes in the underlying relationships the model was trained on). Neglecting post-deployment maintenance invites model staleness and eventual failure. This is where MLOps (Machine Learning Operations) becomes essential. MLOps provides practices and technologies to monitor, maintain, and govern ML systems in production. Potential Solution: Machine Learning Operations (MLOps) The core principle of MLOps is ensuring your model provides continuous business value while in production. How teams operationalize ML varies, but some key practices include: Model Monitoring: Implement monitoring tools to track performance metrics (accuracy, precision, etc.) and automatically alert you to degradation. Consider a feedback loop to trigger retraining processes where necessary, either for real-time or batch deployment. Logging: Even if full MLOps tools aren't initially feasible, start by logging model predictions and comparing them against ground truth, like we showed above with Encord. 
This offers early detection of potential issues. Management and Governance: Establish reproducible ML pipelines for continuous training (CT) and automate model deployment. From the start, consider regulatory compliance issues in your industry. Recommended Read: Model Drift: Best Practices to Improve ML Model Performance. Key Takeaways: 4 Reasons Computer Vision Models Fail in Production Remember that model deployment is not the last step. Do not waste time on a model only to have it fail a few days, weeks, or months later. ML systems differ across teams and organizations, but most failure modes are shared. If you study your ML system, you’ll likely see that some of the reasons your model fails in production are similar to those listed in this article: 1. Data labeling errors 2. Poor data quality 3. Data drift in production 4. Thinking deployment is the final step The goal is for you to understand these failures and learn the best practices to solve or avoid them. You will also find that while most failure modes are data-centric, others are technology-related and involve team practices, culture, and available resources.

April 24

8 min

At Encord, we continually look for ways to enable our customers to bring their models to market faster. Today, we’re announcing the launch of Video Data Management within the Encord Platform, providing an entirely new way to interact with video data. Gone are the days of searching frame by frame for the relevant clip. Now you can filter and search across your entire dataset of videos with just a few clicks. What is Advanced Video Curation? In our new video explorer page, users can search, filter, and sort entire datasets of videos. Video-level metrics, calculated by averaging over the frames of a video, allow the user to curate videos based on a range of characteristics, including average brightness, average sharpness, the number of labeled frames, and many more. Users can also curate within individual videos with the new video frame analytics timelines, which give a temporal view over the entire video. We're thrilled that, with Video Data Curation, Encord is the first and only platform that lets you search, query, and curate relevant video clips as part of your data workflows. Support within Encord This is now available for all Encord Active customers. Please see our documentation for more information on activating this tool. For any questions on how to get access to video curation, please contact sales@encord.com.

April 24

2 min

What is overfitting in computer vision? Overfitting is a significant issue in computer vision where a model learns the training data too well, including noise and irrelevant details. This leads to poor performance on new, unseen data even though the model performs very well on the training data. Overfitting occurs when the model memorizes specific patterns in the training images instead of learning general features. Overfit models have extremely high accuracy on the training data but much lower accuracy on testing data, failing to generalize well. Complex models with many parameters are more prone to overfitting, especially with limited training data. In this blog, we will cover the difference between overfitting and underfitting, how to find out if a model is overfitting, what to do if it is, and how to use tools like Encord Active to detect and avoid overfitting. Overfitting Vs Underfitting: Key Differences Performance on training data: Overfitting leads to very high training accuracy, while underfitting results in low training accuracy. Performance on test/validation data: Both overfitting and underfitting lead to poor performance on unseen data. Model complexity: Overfitting is caused by excessive model complexity, while underfitting is due to oversimplified models. Generalization: Overfit models fail to generalize well, while underfit models cannot capture the patterns needed for generalization. Bias-variance trade-off: Overfitting has high variance and low bias, while underfitting has high bias and low variance. Overfitting and Underfitting: Key Statistical Terminologies When training a machine learning model, you are always trying to capture the underlying patterns in the data while avoiding both overfitting and underfitting. Here is a brief overview of the key statistical concepts that are important to understand in order to improve model performance and generalization. 
Data Leakage Data leakage occurs when information from outside the training data is used to create the model. This can lead to a situation where the model performs exceptionally well during training and evaluation but poorly on truly unseen data. This can happen when data preprocessing steps, such as feature selection or data imputation, are performed using information from the entire dataset, including the test set. Bias Bias refers to the error introduced by approximating a real-world problem with a simplified model. A high-bias model is too simple to capture the underlying patterns in the data, which leads to underfitting. Addressing bias involves increasing model complexity or using more informative features. For more information on how to address bias, read the blog How To Mitigate Bias in Machine Learning Models. Variance Variance is a measure of how much the model’s predictions fluctuate for different training datasets. A high-variance model is overly complex, is sensitive to small fluctuations in the training data, and captures noise in the training dataset. This leads to overfitting, and the machine learning model performs poorly on unseen data. Bias-variance tradeoff The bias-variance tradeoff describes the relationship between bias and variance in model performance. Ideally, you would want to choose a model that both accurately captures the patterns in the training data and generalizes well to unseen data. Unfortunately, it is typically impossible to minimize both bias and variance simultaneously. High-variance learning methods may be able to represent their training dataset well but are at risk of overfitting to noisy or unrepresentative training data. In contrast, algorithms with a high bias typically produce simpler models that don’t tend to overfit but may underfit their training data, failing to capture the patterns in the dataset. Bias and variance are two of the fundamental concepts of machine learning. 
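The tradeoff described above can be written down as the standard bias-variance decomposition. The notation here is an assumption added for illustration and does not come from the article: the target is y = f(x) + ε with zero-mean noise of variance σ², the fitted model is f̂, the loss is squared error, and expectations are taken over training sets and noise.

```latex
\mathbb{E}\left[\left(y - \hat{f}(x)\right)^2\right]
  = \underbrace{\left(\mathbb{E}\left[\hat{f}(x)\right] - f(x)\right)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\left[\left(\hat{f}(x) - \mathbb{E}\left[\hat{f}(x)\right]\right)^2\right]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

Under these assumptions, a more flexible model tends to shrink the bias term while growing the variance term, which is why the expected error on unseen data cannot be driven down by complexity alone.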
If you want to understand better with visualization, watch the video below. Bootstrap Bootstrapping is a statistical technique that involves resampling the original dataset with replacement to create multiple subsets or bootstrap samples. These bootstrap samples are then used to train multiple models, allowing for the estimation of model performance metrics, such as bias and variance, as well as confidence intervals for the model's predictions. K-Fold Cross-Validation K-Fold Cross-Validation is another resampling technique used to estimate a model's performance and generalization capability. The dataset is partitioned into K equal-sized subsets (folds). The model is trained on K-1 folds and evaluated on the remaining fold. This process is repeated K times, with each fold serving as the validation set once. The final performance metric is calculated as the average across all K iterations. LOOCV (Leave-One-Out Cross-Validation) Leave-One-Out Cross-Validation (LOOCV) is a special case of K-Fold Cross-Validation, where K is equal to the number of instances in the dataset. In LOOCV, the model is trained on all instances except one, and the remaining instance is used for validation. This process is repeated for each instance in the dataset, and the performance metric is calculated as the average across all iterations. LOOCV is computationally expensive but can provide a reliable estimate of model performance, especially for small datasets. Here is an amazing video by Josh Starmer explaining cross-validation. Watch it for more information. Assessing Model Fit Residual Analysis Residuals are the differences between the observed values and the values predicted by the model. Residual analysis involves examining the patterns and distributions of residuals to identify potential issues with the model fit. Ideally, residuals should be randomly distributed and exhibit no discernible patterns or trends. 
Structured patterns in the residuals may indicate that the model is missing important features or violating underlying assumptions. Goodness-of-Fit Tests Goodness-of-fit tests provide a quantitative measure of how well the model's predictions match the observed data. These tests typically involve calculating a test statistic and comparing it to a critical value or p-value to determine the significance of the deviation between the model and the data. Common goodness-of-fit tests include: Chi-squared test Kolmogorov-Smirnov test Anderson-Darling test The choice of test depends on the assumptions about the data distribution and the type of model being evaluated. Evaluation Metrics Evaluation metrics are quantitative measures that summarize the performance of a model on a specific task. Different metrics are appropriate for different types of problems, such as regression, classification, or ranking. Some commonly used evaluation metrics include: For regression problems: Mean Squared Error (MSE), Root Mean Squared Error (RMSE), R-squared (R²) For classification problems: Accuracy, Precision, Recall, F1-score, Area Under the Receiver Operating Characteristic Curve (AUROC) Diagnostic Plots Diagnostic plots, such as residual plots, quantile-quantile (Q-Q) plots, and calibration plots, can provide valuable insights into model fit. These graphical representations can help identify patterns, outliers, and deviations from the expected distributions, complementing the quantitative assessment of model fit. Causes for Overfitting in Computer Vision The main causes of overfitting in computer vision are: High Model Complexity Relative to Data Size One of the primary causes of overfitting is when the model's complexity is disproportionately high compared to the size of the training dataset. Deep neural networks, especially those used in computer vision tasks, often have millions or billions of parameters. 
If the training data is limited, the model can easily memorize the training examples, including their noise and peculiarities, rather than learning the underlying patterns that generalize well to new data. Noisy Training Data Image or video datasets, particularly those curated from real-world scenarios, can contain a significant amount of noise, such as variations in lighting, occlusions, or irrelevant background clutter. If the training data is noisy, the model may learn to fit this noise instead of focusing on the relevant features. Insufficient Regularization Regularization techniques, such as L1 and L2 regularization, dropout, or early stopping, are essential for preventing overfitting in deep learning models. These techniques introduce constraints or penalties that discourage the model from learning overly complex patterns that are specific to the training data. Without proper regularization, models can easily overfit, especially when dealing with high-dimensional image data and deep network architectures. Data Leakage Between Training/Validation Sets Data leakage occurs when information from the test or validation set is inadvertently used during the training process. This can happen due to improper data partitioning, preprocessing steps that involve the entire dataset, or other unintentional sources of information sharing between the training and evaluation data. Even minor data leakage can lead to overly optimistic performance estimates and a failure to generalize to truly unseen data. How to Detect an Overfit Model? Here are some common techniques to detect an overfit model: Monitoring the Training and Validation/Test Error During the training process, track the model’s performance on both the training and validation/test datasets. If the training error continues to decrease while the validation/test error starts to increase or plateau, this is a strong indication of overfitting. 
An overfit model will have a significantly lower training error compared to the validation/test error. Learning Curves Plot learning curves that show the training and validation/test error as a function of the training set size. If the training error continues to decrease while the validation/test error remains high or starts to increase as more data is added, it suggests overfitting. An overfit model will have a large gap between the training and validation/test error curves. Cross-Validation Perform k-fold cross-validation on the training data to get an estimate of the model's performance on unseen data. If the cross-validation error is significantly higher than the training error, it may indicate overfitting. Regularization Apply regularization techniques, such as L1 (Lasso) or L2 (Ridge) regularization, dropout, or early stopping. If adding regularization significantly improves the model's performance on the validation/test set while slightly increasing the training error, it suggests that the original model was overfitting. Model Complexity Analysis Examine the model's complexity, such as the number of parameters or the depth of a neural network. A highly complex model with a large number of parameters or layers may be more prone to overfitting, especially when the training data is limited. Visualization For certain types of models, like decision trees or neural networks, visualizing the learned representations or decision boundaries can provide insights into overfitting. If the model has overly complex decision boundaries or representations that appear to fit the training data too closely, it may be an indication of overfitting. Ways to Avoid Overfitting in Computer Vision Data Augmentation Data augmentation techniques, such as rotation, flipping, scaling, and translation, can be applied to the training dataset to increase its diversity and variability. This helps the model learn more robust features and prevents it from overfitting to specific data points. 
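As a rough illustration of the data augmentation idea, the sketch below applies a random horizontal flip, a rotation by a multiple of 90 degrees, and a small horizontal shift to an image stored as a NumPy array. The function name and parameter choices are hypothetical, the shift wraps pixels around the edge rather than padding, and scaling is left out to keep the example short. Real pipelines usually rely on a dedicated augmentation library.

```python
import numpy as np


def augment(image, rng, max_shift=2):
    """Return a randomly flipped, rotated, and shifted copy of an (H, W) or (H, W, C) image."""
    out = np.asarray(image)
    # Horizontal flip with probability 0.5.
    if rng.random() < 0.5:
        out = np.fliplr(out)
    # Rotate by 0, 90, 180, or 270 degrees in the image plane.
    out = np.rot90(out, k=int(rng.integers(0, 4)))
    # Shift along the width axis; np.roll wraps pixels around (a simplification).
    shift = int(rng.integers(-max_shift, max_shift + 1))
    out = np.roll(out, shift, axis=1)
    return out.copy()


rng = np.random.default_rng(42)
image = np.arange(16).reshape(4, 4)

# Generate a few augmented variants of the same training image.
variants = [augment(image, rng) for _ in range(5)]
for variant in variants:
    print(variant.shape)
```

Augmentations should be applied to the training split only. For detection or segmentation tasks, the same geometric transform also has to be applied to the labels.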
Observe and Monitor the Class Distributions of Annotated Samples During annotation, observe class distributions in the dataset. If certain classes are underrepresented, use active learning to prioritize labeling unlabeled samples from those minority classes. Encord Active can help find similar images or objects to the underrepresented classes, allowing you to prioritize labeling them, thereby reducing data bias. Finding similar images in Encord Active. Early Stopping Early stopping is a regularization technique that involves monitoring the model's performance on a validation set during training. If the validation loss stops decreasing or starts to increase, it may indicate that the model is overfitting to the training data. In such cases, the training process can be stopped early to prevent further overfitting. Dropout Dropout is another regularization technique that randomly drops (sets to zero) a fraction of the activations in a neural network during training. This helps prevent the model from relying too heavily on any specific set of features and encourages it to learn more robust and distributed representations. L1 and L2 Regularization L1 and L2 regularization techniques add a penalty term to the loss function, which discourages the model from having large weights. This helps prevent overfitting by encouraging the model to learn simpler and more generalizable representations. Transfer Learning Transfer learning involves using a pre-trained model on a large dataset (e.g., ImageNet) as a starting point for training on a new, smaller dataset. The pre-trained model has already learned useful features, which can help prevent overfitting and improve generalization on the new task. Ensemble Methods Ensemble methods, such as bagging (e.g., random forests) and boosting (e.g., AdaBoost), combine multiple models to make predictions. These techniques can help reduce overfitting by averaging out the individual biases and errors of the component models. 
For more information, read the blog What is Ensemble Learning? Model Evaluation Regularly monitoring the model's performance on a held-out test set and evaluating its generalization capabilities is essential for detecting and addressing overfitting issues. Using Encord Active to Reduce Model Overfitting Encord Active is a comprehensive platform offering features to curate a dataset that can help reduce the model overfitting and evaluate the model’s performance to identify and address any potential issues. Here are a few of the ways Encord Active can be used to reduce model overfitting: Evaluating Training Data with Data and Label Quality Metrics Encord Active allows users to assess the quality of their training data with data quality metrics. It provides metrics such as missing values, data distribution, and outliers. By identifying and addressing data anomalies, practitioners can ensure that their dataset is robust and representative. Encord Active also allows you to ensure accurate and consistent labels for your training dataset. The label quality metrics, along with the label consistency checks and label distribution analysis help in finding noise or anomalies which contribute to overfitting. Evaluating Model Performance with Model Quality Metrics After training a model, it’s essential to evaluate its performance thoroughly. Encord Active provides a range of model quality metrics, including accuracy, precision, recall, F1-score, and area under the receiver operating characteristic curve (AUC-ROC). These metrics help practitioners understand how well their model generalizes to unseen data and identify the data points which contribute to overfitting. Active Learning Workflow Overfitting often occurs when models are trained on insufficient or noisy data. Encord Active incorporates active learning techniques, allowing users to iteratively select the most informative samples for labeling. 
By actively choosing which data points to label, practitioners can improve model performance while minimizing overfitting.
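One common way to pick "the most informative samples" is uncertainty sampling: label the images on which the current model is least sure. The sketch below ranks unlabeled samples by the entropy of their predicted class probabilities. It is a generic illustration, not a description of how Encord Active selects samples, and the function name and example probabilities are made up.

```python
import numpy as np


def select_most_uncertain(probs, k):
    """Return indices of the k samples with the highest predictive entropy."""
    probs = np.asarray(probs, dtype=np.float64)
    # Small epsilon avoids log(0) for classes predicted with zero probability.
    entropy = -np.sum(probs * np.log(probs + 1e-12), axis=1)
    # Sort by descending entropy and keep the top k.
    return np.argsort(-entropy)[:k]


# Hypothetical softmax outputs for four unlabeled images over three classes.
predictions = [
    [0.98, 0.01, 0.01],  # confident
    [0.34, 0.33, 0.33],  # nearly uniform, most uncertain
    [0.70, 0.20, 0.10],
    [0.50, 0.50, 0.00],
]

to_label = select_most_uncertain(predictions, k=2)
print(to_label)
```

The selected samples would then be sent to annotators, added to the training set, and the model retrained before the next selection round.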

April 19

8 min

Multimodal deep learning is a recent trend in artificial intelligence (AI) that is revolutionizing how machines understand the real world using multiple data modalities, such as images, text, video, and audio. In particular, since OpenAI introduced the Contrastive Language-Image Pre-Training (CLIP) model, multiple machine learning frameworks have emerged that exploit visual representations to infer textual descriptions. The newer models adapt the CLIP framework to domain-specific use cases using more specialized datasets. They also report better generalization performance than the models that came before them. This article discusses the benefits, challenges, and alternatives of OpenAI CLIP to help you choose a model for your specific domain. The architectures covered are: PubMedCLIP, PLIP, SigLip, StreetCLIP, FashionCLIP, CLIP-RSICD, BioCLIP, and CLIPBert. OpenAI CLIP Model CLIP is an open-source vision-language AI model by OpenAI trained using image and natural language data to perform zero-shot classification tasks. Users can provide textual captions and use the model to assign a relevant label to the query image. OpenAI CLIP Model: Architecture and Development The training data consists of image-text pairs collected from the internet. For each image, the training task is to predict which of 32,768 candidate text snippets is its actual pairing, which requires the model to understand visual concepts and relate them to the textual data. CLIP Architecture The model primarily uses an image encoder and a text encoder that convert images and labels into embeddings. Optimization involves minimizing a contrastive loss function by computing similarity scores between these embeddings and associating the correct label with an image. See Also: What is Vector Similarity Search? Once trained, the user can provide an unseen image as input with multiple captions to the image and text encoders. 
CLIP will then predict the correct label that goes with the image. Benefits of OpenAI CLIP OpenAI CLIP has multiple benefits over traditional vision models. The list below mentions the most prominent advantages: Zero-shot Learning (ZSL): CLIP’s training approach allows it to label unseen images without requiring expensive training on new datasets. Like Generative Pre-trained Transformer - 3 (GPT-3) and GPT-4, CLIP can perform zero-shot classification tasks using natural language data with minimal training overhead. The property also helps users fine-tune CLIP more quickly to adapt to new tasks. Better Real-World Performance: CLIP demonstrates better real-world performance than traditional vision models, which only work well with benchmark datasets. Limitations of OpenAI CLIP Although CLIP is a robust framework, it has a few limitations, as highlighted below: Poor Performance on Fine-grained Tasks: CLIP needs to improve its classification performance for fine-grained tasks such as distinguishing between car models, animal species, flower types, etc. Out-of-Distribution Data: While CLIP performs well on data with distributions similar to its training set, performance drops when it encounters out-of-distribution data. The model requires more diverse image pre-training to generalize to entirely novel tasks. Inherent Social Bias: The training data used for CLIP consists of randomly curated images with labels from the internet. The approach implies the model learns intrinsic biases present in image captions as the image-text pairs do not undergo filtration. Due to these limitations, the following section will discuss a few alternatives for domain-specific tasks. Learn how to build visual search engines with CLIP and ChatGPT in our on-demand webinar. Alternatives to CLIP Since CLIP’s introduction, multiple vision-language algorithms have emerged with unique capabilities for solving problems in healthcare, fashion, retail, etc. 
We will discuss a few alternative models that use the CLIP framework as their base. We will also briefly mention their architecture, development approaches, performance results, and use cases. 1. PubMedCLIP PubMedCLIP is a fine-tuned version of CLIP for medical visual question-answering (MedVQA), which involves answering natural language questions about an image containing medical information. PubMedCLIP: Architecture and Development The model is pre-trained on the Radiology Objects in Context (ROCO) dataset, which consists of 80,000 samples with multiple image modalities, such as X-ray, fluoroscopy, mammography, etc. The image-text pairs come from PubMed articles; each text snippet briefly describes the image’s content. PubMedCLIP Architecture Pre-training includes fine-tuning CLIP’s image and text encoders to minimize the contrastive language and vision losses. The pretrained module, PubMedCLIP, and a Convolutional Denoising Image Autoencoder (CDAE) encode images. A question encoder converts natural language questions into embeddings and combines them with the encoded image through a bilinear attention network (BAN). The training objective is to map the embeddings to the correct answer by minimizing the answer classification loss and the image reconstruction loss, the latter computed using a CDAE decoder. Performance Results of PubMedCLIP The accuracy metric shows an improvement of 1% compared to CLIP on the VQA-RAD dataset, while PubMedCLIP with the Vision Transformer ViT-32 as the backbone shows an improvement of 3% on the SLAKE dataset. See Also: Introduction to Vision Transformers (ViT). PubMedCLIP: Use Case Healthcare professionals can use PubMedCLIP to interpret complex medical images for better diagnosis and patient care. 2. PLIP The Pathology Language-Image Pre-Training (PLIP) model is a CLIP-based framework trained on extensive, high-quality pathological data curated from open social media platforms such as medical Twitter. 
PLIP: Architecture and Development Researchers used 32 pathology hashtags according to the recommendations of the United States and Canadian Academy of Pathology (USCAP) and the Pathology Hashtag Ontology project. The hashtags helped them retrieve relevant tweets containing de-identified pathology images and natural language descriptions. The final dataset, OpenPath, comprises 116,504 image-text pairs from Twitter posts, 59,869 image-text pairs from the corresponding replies with the highest likes, and 32,041 additional image-text pairs from the internet and the LAION dataset. OpenPath Dataset The researchers used OpenPath to fine-tune CLIP through an image preprocessing pipeline that involves image down-sampling, augmentations, and random cropping. Performance Results of PLIP PLIP achieved state-of-the-art (SoTA) performance across four benchmark datasets. On average, PLIP achieved an F1 score of 0.891, while CLIP scored 0.813. PLIP: Use Case PLIP aims to classify pathological images for multiple medical diagnostic tasks and help retrieve unique pathological cases through image or natural language search. New to medical imaging? Check out ‘Guide to Experiments for Medical Imaging in Machine Learning.’ 3. SigLip SigLip uses a simpler sigmoid loss function to optimize the training process instead of the softmax contrastive loss traditionally used in CLIP. The method boosts training efficiency and allows users to scale the process when developing models using more extensive datasets. SigLip: Architecture and Development Optimizing the contrastive loss function implies maximizing the distance between non-matching image-text pairs while minimizing the distance between matching pairs. However, the method requires text-to-image and image-to-text comparisons across all images and text captions in a batch. It also involves computing normalization factors to calculate a softmax loss. The approach is computationally expensive and memory-inefficient. 
Instead, the sigmoid loss turns the task into a binary classification problem, assigning a positive label to matching pairs and a negative label to non-matching combinations.

[Figure: Efficient loss implementation]

In addition, the computation is split across multiple devices: each device computes the loss for the positive and negative pairs in its own chunk of image-text pairs, and the devices then swap text snippets to compute the loss against the remaining images.

Performance Results of SigLip

Based on the accuracy metric, the sigmoid loss outperforms the softmax loss for smaller batch sizes on the ImageNet dataset.

[Figure: Performance comparison]

The performance of both losses deteriorates beyond a certain batch size, with softmax performing slightly better at very large batch sizes.

SigLip: Use Case

SigLip is suitable for training tasks involving extensive datasets. Users can fine-tune SigLip using smaller batch sizes for faster training.

4. StreetCLIP

StreetCLIP is an image geolocalization model that fine-tunes CLIP on geolocation data to predict where an image was taken. The model is available on Hugging Face for further research.

StreetCLIP: Architecture and Development

The model improves CLIP’s zero-shot learning capabilities by training a generalized zero-shot learning (GZSL) classifier that classifies seen and unseen images simultaneously during the training process.

[Figure: StreetCLIP architecture]

Fine-tuning involves generating a synthetic caption for each image that specifies the city, country, and region. The training objective is to correctly predict these three labels for seen and unseen photos by optimizing a GZSL loss and a vision representation loss.

Performance Results of StreetCLIP

Compared to CLIP, StreetCLIP has better geolocation prediction accuracy. It outperforms CLIP by 0.3 to 2.4 percentage points on the IM2GPS and IM2GPS3K benchmarks.
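The synthetic captions used for StreetCLIP's fine-tuning can be pictured with a short Python sketch. The `Location` class, the `synthetic_caption` helper, and the exact caption wording are hypothetical, chosen only to show how city, region, and country labels might be turned into text that CLIP's text encoder can consume.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    """Geographic labels attached to one training image (hypothetical schema)."""
    city: str
    region: str
    country: str


# Assumed wording for illustration; the real template may differ.
CAPTION_TEMPLATE = (
    "A Street View photo close to the town of {city} "
    "in the region of {region} in {country}."
)


def synthetic_caption(location: Location) -> str:
    """Fill the caption template with the image's location labels."""
    return CAPTION_TEMPLATE.format(
        city=location.city,
        region=location.region,
        country=location.country,
    )


print(synthetic_caption(Location("Munich", "Bavaria", "Germany")))
```

Because all three labels appear in one sentence, a single contrastive text-image objective can supervise the city, region, and country predictions together.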
StreetCLIP: Use Case

StreetCLIP is suitable for navigational purposes where users require information on weather, seasons, climate patterns, etc. It can also help intelligence agencies and journalists extract geographical information from crime scenes.

5. FashionCLIP

FashionCLIP (F-CLIP) fine-tunes the CLIP model using fashion datasets consisting of apparel images and textual descriptions. The model is available on GitHub and Hugging Face.

FashionCLIP: Architecture and Development

The researchers trained the model on 700k image-text pairs in the Farfetch inventory dataset and evaluated it on image retrieval and classification tasks.

[Figure: F-CLIP architecture]

The evaluation also involved testing for grounding capability. For instance, zero-shot segmentation assessed whether the model understood fashion concepts such as sleeve length, brands, textures, and colors. The researchers also evaluated compositional understanding by creating improbable objects to see if F-CLIP generated appropriate captions. For instance, they checked whether F-CLIP could generate the caption "a Nike dress" when shown a picture of a long dress with the Nike symbol.

Performance Results of FashionCLIP

F-CLIP outperforms CLIP on multiple benchmark datasets for multi-modal retrieval and product classification tasks. For instance, F-CLIP's F1 score for product classification is 0.71 on the F-MNIST dataset, while CLIP's is 0.66.

FashionCLIP: Use Case

Retailers can use F-CLIP to build chatbots for their e-commerce sites to help customers find relevant products based on specific text prompts. The model can also help users build image-generation applications for visualizing new product designs based on textual descriptions.

6. CLIP-RSICD

CLIP-RSICD is a fine-tuned version of CLIP trained on the Remote Sensing Image Caption Dataset (RSICD). It is based on Flax, a neural network library for JAX (a Python package for high-performance numerical computing). Users can run the model on a CPU. The model is available on GitHub.
CLIP-RSICD: Architecture and Development

The RSICD consists of 10,000 images from Google Earth, Baidu Map, MapABC, and Tianditu. The images come in multiple resolutions, and each has five captions.

[Figure: RSICD dataset]

Because the dataset is small, the developers applied augmentation techniques using transforms from PyTorch’s Torchvision package. Transformations included random cropping, random resizing and cropping, color jitter, and random horizontal and vertical flipping.

Performance Results of CLIP-RSICD

On the RSICD test set, the regular CLIP model had an accuracy of 0.572, while CLIP-RSICD had an accuracy of 0.883.

CLIP-RSICD: Use Case

CLIP-RSICD is best for extracting information from satellite images and drone footage. It can also help identify red flags in specific regions to predict natural disasters due to climate change.

7. BioCLIP

BioCLIP is a foundation model for the tree of life, trained on an extensive biology image dataset to classify biological organisms according to their taxonomy.

BioCLIP: Architecture and Development

BioCLIP fine-tunes the CLIP framework on a custom-curated dataset, TreeOfLife-10M, comprising 10 million images covering 454 thousand taxa in the tree of life. Each image is labeled with a taxon that describes its kingdom, phylum, class, order, family, genus, and species.

[Figure: Taxonomic labels]

The CLIP model takes the taxonomy as a flattened string and matches the description with the correct image by optimizing the contrastive loss function. The researchers also enhance the training process by providing both scientific and common names for a species to improve generalization performance. This method helps the model recognize a species through the general name used in everyday language.

Performance Results of BioCLIP

On average, BioCLIP boosts accuracy by 18% on zero-shot classification tasks compared to CLIP on ten different biological datasets.
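The idea of feeding the taxonomy to CLIP as a flattened string can be sketched in a few lines of Python. The `flatten_taxonomy` helper, the dictionary layout, and the "with common name" wording are assumptions made for this illustration rather than BioCLIP's actual preprocessing code.

```python
# Taxonomic ranks in order, from most general to most specific.
RANKS = ("kingdom", "phylum", "class", "order", "family", "genus", "species")


def flatten_taxonomy(taxon, common_name=None):
    """Join the ranks into one string, optionally appending a common name."""
    # Skip ranks that are missing so partially labeled images still work.
    text = " ".join(taxon[rank] for rank in RANKS if rank in taxon)
    if common_name:
        text += f" with common name {common_name}"
    return text


magpie = {
    "kingdom": "Animalia",
    "phylum": "Chordata",
    "class": "Aves",
    "order": "Passeriformes",
    "family": "Corvidae",
    "genus": "Pica",
    "species": "hudsonia",
}

print(flatten_taxonomy(magpie))
print(flatten_taxonomy(magpie, common_name="black-billed magpie"))
```

Keeping the ranks in a fixed order means two species in the same family share a long common prefix, which gives the text encoder a chance to place related organisms close together.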
BioCLIP: Use Case

BioCLIP is ideal for biological research involving VQA tasks where experts want information about specific species quickly.

Watch Also: How to Fine Tune Foundation Models to Auto-Label Training Data.

8. CLIPBert

CLIPBert is a video and language model that uses a sparse sampling strategy to quickly classify video clips from diverse domains. It uses Bidirectional Encoder Representations from Transformers (BERT), a large language model (LLM), as its text encoder and ResNet-50 as the visual encoder.

CLIPBert: Architecture and Development

The model’s sparse sampling method uses only a few sampled clips from a video in each training step to extract visual features through a convolutional neural network (CNN). The strategy improves training speed compared to methods that use full video streams to extract dense features. The model initializes BERT with weights pre-trained on BookCorpus and English Wikipedia to get word embeddings from textual descriptions of the corresponding video clips.

[Figure: CLIPBert]

Training involves correctly predicting a video’s description by combining each clip’s predictions and comparing them with the ground truth. The researchers used 8 NVIDIA V100 GPUs to train the model for 40 epochs over four days. During inference, the model samples multiple clips and aggregates the prediction for each clip to give a final video-level prediction.

Performance Results of CLIPBert

CLIPBert outperforms multiple SoTA models on video retrieval and question-answering tasks. For instance, CLIPBert shows a 4% improvement over HERO on video retrieval tasks.

CLIPBert: Use Case

CLIPBert can help users analyze complex videos and allow them to develop generative AI tools for video content creation.

See Also: FastViT: Hybrid Vision Transformer with Structural Reparameterization.
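The sparse sampling and clip-level aggregation described for CLIPBert can be sketched with NumPy. This is a simplified illustration under assumptions: the helper names are hypothetical, clips are chosen uniformly at random, and per-clip scores are combined by mean pooling, which is only one possible aggregation choice.

```python
import numpy as np


def sample_clip_starts(num_frames, clip_len, num_clips, rng):
    """Pick start frames for a few short clips instead of using every frame."""
    last_valid_start = num_frames - clip_len
    if last_valid_start + 1 < num_clips:
        raise ValueError("video too short for the requested number of clips")
    starts = rng.choice(last_valid_start + 1, size=num_clips, replace=False)
    return np.sort(starts)


def aggregate_clip_predictions(clip_scores):
    """Average per-clip scores into a single video-level prediction."""
    return np.mean(np.asarray(clip_scores, dtype=float), axis=0)


rng = np.random.default_rng(0)

# Training step: only a couple of clips from a 300-frame video are processed.
train_starts = sample_clip_starts(num_frames=300, clip_len=16, num_clips=2, rng=rng)

# Inference: more clips are sampled, and their scores are pooled.
per_clip_scores = [[0.2, 0.8], [0.4, 0.6], [0.3, 0.7]]
video_scores = aggregate_clip_predictions(per_clip_scores)
print(train_starts, video_scores)
```

Sampling a handful of clips keeps the cost of each training step roughly constant regardless of video length, which is the source of the speed-up over dense feature extraction.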
Alternatives to OpenAI CLIP: Key Takeaways

With frameworks like CLIP and ChatGPT, combining computer vision with NLP is becoming the new norm for developing advanced multi-modal models to solve modern industrial problems. Below are a few critical points to remember regarding OpenAI CLIP and its alternatives.

Benefits: OpenAI CLIP is an excellent choice for general vision-language tasks that require little domain-specific expertise.

Limitations: While CLIP’s zero-shot capability helps users adapt the model to new tasks, it underperforms on fine-grained tasks and out-of-distribution data.

Alternatives: Multiple CLIP-based options exist that are suitable for medical image analysis, biological research, geo-localization, fashion, and video understanding.

April 19

8 min